Databricks is no longer just a tool for running Spark in notebooks. In 2025, it’s evolved into a unified lakehouse platform that spans the full lifecycle of data and AI: ingestion, transformation, cataloging, governance, analytics, and with Mosaic AI, even generative AI applications in production.

This post walks through what Databricks really offers today, how it’s applied in real-world data architectures, what practices actually work at scale, and why so many companies are consolidating their fragmented data stacks into a single, governed platform.

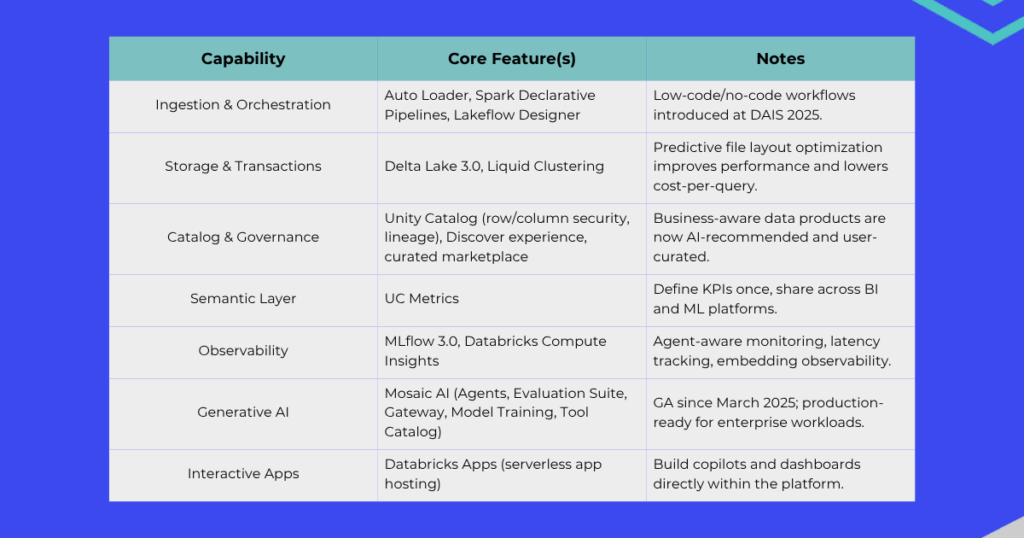

What Databricks offers end-to-end

Databricks has evolved well beyond “a platform to run Spark.” It now delivers vertically integrated capabilities across the entire data lifecycle: from data ingestion and storage to processing, analytics, machine learning, and governance. Its native Delta Lake technology ensures data reliability with ACID transactions and version control, making it easy to manage large, complex datasets in cloud environments.

The platform provides a collaborative workspace where data engineers, analysts, and data scientists can work together using multiple languages like SQL and Python. With optimized Spark performance and seamless integration with BI tools, Databricks accelerates the transformation of raw data into actionable insights. Its built-in machine learning lifecycle management, powered by MLflow, helps teams develop, track, and deploy models efficiently.

Beyond development, Databricks supports operationalization and monitoring to ensure models run reliably in production. It also offers strong security, compliance, and governance features, including role-based access and data encryption. This makes Databricks a unified solution that empowers organizations to unlock the full value of their data, from ingestion to trusted, scalable AI-driven applications.

Ok, but what’s new in 2025?

Well, this year Databricks has doubled down on unifying operational and analytical workloads:

Lakebase – A serverless Postgres engine embedded in the lakehouse. Ideal for low-latency transactional queries.

Agent Bricks – Auto-optimizing, production-ready agents for generative AI pipelines.

Lakebridge – A guided migration framework for teams transitioning from legacy warehouses.

Neon Acquisition – Databricks acquired serverless Postgres startup Neon for a reported $1B, reinforcing its hybrid OLTP + OLAP architecture and signaling a more direct challenge to Snowflake.

These additions make it possible to combine structured, semi-structured, and AI-native data within a single environment, governed by Unity Catalog and powered by Photon, Delta, and Mosaic AI.

Reference Architecture for modern data products

Databricks enables data teams to go from raw ingestion to real-time AI apps, all in a single platform. It combines the power of a data lake and a data warehouse, allowing organizations to unify their data without the complexity of managing multiple systems. Data engineers can ingest large volumes of raw data from various sources, such as streaming logs, databases, or APIs, directly into the Lakehouse, creating a centralized foundation for all analytics and AI workflows.

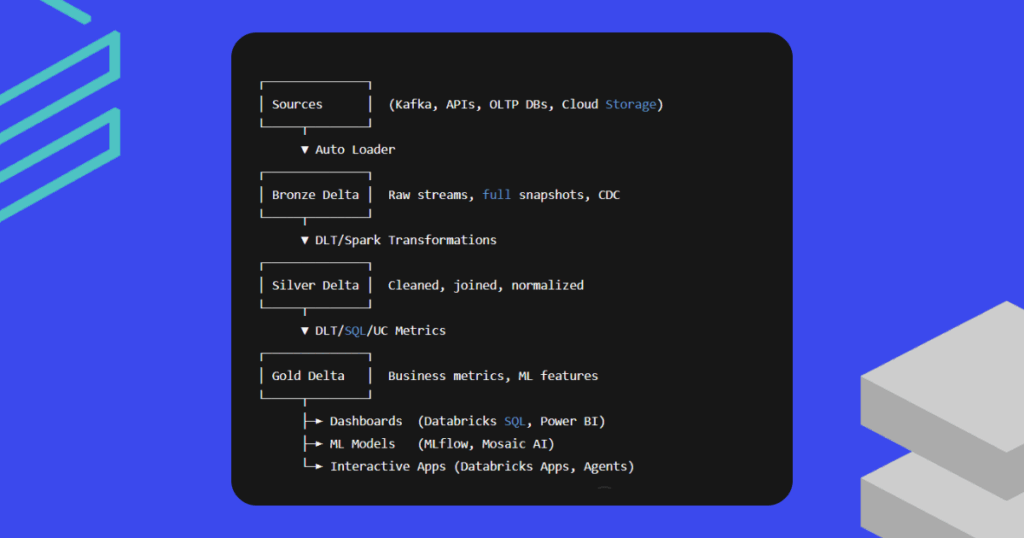

A typical data pipeline in Databricks follows a structured approach: data flows from various sources (Kafka, APIs, OLTP databases, cloud storage) through Auto Loader into the Bronze Delta layer, which stores raw streams, full snapshots, and change data capture (CDC). From there, Delta Live Tables (DLT) or Spark transformations clean, join, and normalize the data into the Silver Delta layer. Finally, business metrics and machine learning features are generated in the Gold Delta layer using DLT, SQL, or Unity Catalog metrics. This Gold layer then feeds multiple outputs, including dashboards (Databricks SQL, Power BI), ML models (MLflow, Mosaic AI), and interactive applications (Databricks Apps, Agents).

From this unified data layer, data scientists and analysts collaborate using interactive notebooks with support for languages like Python, SQL, Scala, and R. The Delta Lake technology ensures reliable, ACID-compliant transactions that maintain data integrity and consistency. Databricks also simplifies the entire machine learning lifecycle with managed tools like MLflow, enabling teams to train, experiment, and deploy AI models that scale seamlessly and integrate into real-time applications or dashboards for instant insights.

Why does the architecture in the image above work? The answer is simple: each layer has one clear job.

Bronze layer serves as immutable source-of-truth storage, keeping raw ingested data for replayability and audits.

Silver layer contains cleaned and lightly transformed data, often shared across pipelines.

Gold layer delivers business-ready metrics and ML features that are curated and performance-tuned.

All layers are stored in Delta Lake format, enabling ACID transactions, schema evolution, and change tracking. Access control and metadata are enforced through Unity Catalog, while DLT pipelines provide lineage, observability, and data quality enforcement out of the box.
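To make the division of labor between the layers concrete, here is a minimal sketch in plain Python. It is a toy model, not Databricks code: the lists stand in for Delta tables, and the field names (`event_id`, `customer`, `amount`) and helper functions are hypothetical. In a real workspace the same steps would typically be DLT or Spark transformations.

```python
from collections import defaultdict

# Bronze: raw events kept exactly as ingested (append-only, never edited).
bronze = [
    {"event_id": "e1", "customer": " alice ", "amount": "120.50"},
    {"event_id": "e2", "customer": "bob", "amount": "not-a-number"},
    {"event_id": "e1", "customer": " alice ", "amount": "120.50"},  # duplicate delivery
    {"event_id": "e3", "customer": "alice", "amount": "30"},
]


def to_silver(rows):
    """Clean, type, and de-duplicate raw rows without mutating Bronze."""
    seen, silver = set(), []
    for row in rows:
        if row["event_id"] in seen:
            continue  # drop repeated deliveries of the same event
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # unparseable here, but still in Bronze for replay or audit
        seen.add(row["event_id"])
        silver.append(
            {
                "event_id": row["event_id"],
                "customer": row["customer"].strip(),
                "amount": amount,
            }
        )
    return silver


def to_gold(rows):
    """Aggregate cleaned rows into a business-ready metric: spend per customer."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["customer"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
```

Because Bronze is never modified, a fix to the cleaning logic in `to_silver` can be replayed over the full history, which is the main reason to keep the raw layer immutable.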

A practical example of this architecture in action comes from a global fintech platform we supported. In this case, credit card events were streamed in real time into the Silver layer, where they were enriched with customer metadata. These enriched features were then fed into a machine learning model deployed using Mosaic AI Agents for real-time fraud detection. Meanwhile, key metrics were made available to business intelligence analysts through Databricks SQL in the Gold layer, enabling comprehensive insights and faster decision-making.

Practices that actually scale

Not every “best practice” survives contact with real data teams. Here’s what we’ve learned actually scales well in practice:

Starting governance from Day One is crucial. Many teams postpone governance setup until it becomes an urgent issue, and by then it is often too late. With tools like Unity Catalog, tagging data assets early, such as marking personally identifiable information (PII), financial data, or regional datasets right at ingestion, makes a huge difference. These tags allow attribute-based access control (ABAC) to dynamically filter or mask data depending on user roles or regions. With the right column tags in place from the start, many compliance incidents simply never happen.
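The idea behind tag-driven access control can be sketched in a few lines of plain Python. This is an illustration of the ABAC concept only, not Unity Catalog syntax: the tag names, roles, and the `apply_policy` helper are all hypothetical.

```python
# Hypothetical column tags, as they might be assigned at ingestion time.
COLUMN_TAGS = {
    "customer_id": set(),
    "email": {"pii"},
    "card_number": {"pii", "financial"},
    "region": set(),
}

# Hypothetical policy: the tags each role is cleared to read unmasked.
ROLE_CLEARANCE = {
    "analyst": set(),
    "support": {"pii"},
    "fraud_ops": {"pii", "financial"},
}


def apply_policy(row, role, user_region=None):
    """Return the row as `role` may see it, or None if the row is filtered out."""
    # Row filter: a region-bound user only sees rows from their own region.
    if user_region is not None and row["region"] != user_region:
        return None
    cleared = ROLE_CLEARANCE[role]
    # Column mask: a value is visible only if the role holds every tag on it.
    return {
        col: (value if COLUMN_TAGS[col] <= cleared else "***")
        for col, value in row.items()
    }


row = {
    "customer_id": 42,
    "email": "a@example.com",
    "card_number": "4111-0000-0000-1111",
    "region": "EU",
}
print(apply_policy(row, "analyst"))
print(apply_policy(row, "support", user_region="US"))
```

The policy never names a specific column. Tagging a new column as `pii` at ingestion is enough for it to be masked for every role without that clearance.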

Switching to declarative pipelines (DLT) transforms how data workflows are managed. Traditional DAG orchestration tools like Airflow or Prefect require significant manual effort to implement retries, quality checks, and lineage tracking. In contrast, DLT lets you write a simple SQL statement or Python function, and the platform automatically infers the execution graph, handles monitoring, and natively supports expectations. This drastically reduces pipeline complexity while providing enhanced observability that would be challenging to build independently.
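To show what "the platform infers the execution graph" means, here is a toy declarative runner in plain Python. It is not the DLT API: the `table` and `expect_or_drop` decorators, the registries, and `run` are hypothetical stand-ins written for this post, and the sketch assumes the dependencies contain no cycles.

```python
import inspect

TABLES = {}        # table name -> (function, names of upstream tables)
EXPECTATIONS = {}  # table name -> list of (label, predicate)


def table(fn):
    """Register a table; its upstream dependencies are its parameter names."""
    TABLES[fn.__name__] = (fn, list(inspect.signature(fn).parameters))
    return fn


def expect_or_drop(label, predicate):
    """Attach a row-level quality rule; rows that fail it are dropped."""
    def wrap(fn):
        EXPECTATIONS.setdefault(fn.__name__, []).append((label, predicate))
        return fn
    return wrap


def run():
    """Build every table once, in dependency order, and count dropped rows."""
    results, dropped = {}, {}

    def build(name):
        if name not in results:
            fn, deps = TABLES[name]
            rows = fn(*(build(dep) for dep in deps))
            for label, predicate in EXPECTATIONS.get(name, []):
                kept = [r for r in rows if predicate(r)]
                dropped[(name, label)] = len(rows) - len(kept)
                rows = kept
            results[name] = rows
        return results[name]

    for name in TABLES:
        build(name)
    return results, dropped


@table
def raw_orders():
    return [{"id": 1, "amount": 40.0}, {"id": 2, "amount": -5.0}, {"id": 3, "amount": 60.0}]


@table
@expect_or_drop("positive_amount", lambda r: r["amount"] > 0)
def clean_orders(raw_orders):
    return [dict(r) for r in raw_orders]


@table
def order_totals(clean_orders):
    return [{"total": sum(r["amount"] for r in clean_orders)}]


results, dropped = run()
print(results["order_totals"], dropped)
```

Each function only declares what it reads and what it returns. Ordering, quality enforcement, and the dropped-row counts come from the runner, which is the part a managed service takes off your hands.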

Leveraging Unity Catalog Metrics is another game changer. Defining key performance indicators (KPIs) once and using them consistently across BI dashboards, machine learning features, and experimentation reports resolves the common frustration of different teams having conflicting definitions of metrics like “revenue” or “active users.” This unified approach streamlines communication and ensures everyone is working from the same data foundation.
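The "define once, use everywhere" principle looks roughly like this in plain Python. The registry, the metric definitions, and the two consumers are hypothetical and are not Unity Catalog syntax; in particular, counting only paid orders toward "revenue" and "active users" is an assumption made for the example.

```python
# Single source of truth for metric definitions (hypothetical definitions).
METRICS = {
    "revenue": lambda rows: sum(r["amount"] for r in rows if r["status"] == "paid"),
    "active_users": lambda rows: len({r["user"] for r in rows if r["status"] == "paid"}),
}


def measure(name, rows):
    """Every consumer computes a metric through this one entry point."""
    return METRICS[name](rows)


orders = [
    {"user": "u1", "amount": 50.0, "status": "paid", "variant": "A"},
    {"user": "u2", "amount": 20.0, "status": "refunded", "variant": "A"},
    {"user": "u1", "amount": 30.0, "status": "paid", "variant": "B"},
    {"user": "u3", "amount": 10.0, "status": "paid", "variant": "B"},
]


def dashboard_tile(rows):
    """BI consumer: headline numbers for a dashboard."""
    return {name: measure(name, rows) for name in METRICS}


def experiment_report(rows):
    """Experimentation consumer: the same revenue definition, split by variant."""
    variants = sorted({r["variant"] for r in rows})
    return {v: measure("revenue", [r for r in rows if r["variant"] == v]) for v in variants}


print(dashboard_tile(orders))
print(experiment_report(orders))
```

Since both consumers call `measure`, the per-variant revenue in the experiment report always adds up to the dashboard total. Changing what counts as revenue is a one-line edit in one place.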

Adopting Mosaic AI Evaluation early in the process safeguards AI feature reliability. AI models often fail unpredictably, especially when integrating generative AI features. Mosaic’s Evaluation Suite benchmarks critical aspects such as prompt cost, latency, and hallucination risk before deployment. Treating each prompt like a unit test and holding every data source accountable as part of your reliability contract helps catch issues early, preventing a chatbot from providing incorrect answers in edge cases.
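Treating a prompt like a unit test can be as simple as the harness below. It is a plain-Python sketch of the idea, not the Mosaic AI evaluation API: `stub_model` stands in for a deployed endpoint, the cases and budgets are hypothetical, and counting words is only a crude proxy for token cost.

```python
import time


def stub_model(prompt):
    """Stand-in for a deployed model endpoint (hypothetical canned answers)."""
    answers = {
        "What is the refund window?": "Refunds are accepted within 30 days.",
        "Do you ship to Mars?": "Yes, we ship to Mars in 2 days.",
    }
    return answers.get(prompt, "I don't know.")


# Each case is a small contract: what the answer must and must not contain.
CASES = [
    {"prompt": "What is the refund window?", "must_include": ["30 days"], "must_not_include": []},
    {"prompt": "Do you ship to Mars?", "must_include": [], "must_not_include": ["Yes"]},
]


def evaluate(model, cases, max_latency_s=1.0, max_words=50):
    """Run every case and report content, latency, and cost-proxy failures."""
    report = []
    for case in cases:
        start = time.perf_counter()
        answer = model(case["prompt"])
        latency = time.perf_counter() - start
        words = len(case["prompt"].split()) + len(answer.split())

        failures = []
        failures += [f"missing: {s}" for s in case["must_include"] if s not in answer]
        failures += [f"forbidden: {s}" for s in case["must_not_include"] if s in answer]
        if latency > max_latency_s:
            failures.append("latency budget exceeded")
        if words > max_words:
            failures.append("cost budget exceeded")

        report.append({"prompt": case["prompt"], "passed": not failures, "failures": failures})
    return report


report = evaluate(stub_model, CASES)
for entry in report:
    print(entry["passed"], entry["prompt"], entry["failures"])
```

The second case fails because the stub confidently answers a question it should decline, which is exactly the kind of edge-case answer you want to catch before deployment rather than in a customer chat.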

Finally, monitoring what truly matters is essential. MLflow 3.0 goes beyond simple model versioning; it enables continuous monitoring of live traffic to AI agents, tracking metrics like response latency, embedding drift, and hallucination scores. This closes the feedback loop between data ingestion and AI application performance, ensuring that models remain accurate and reliable in production environments.
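Two of the signals mentioned above, response latency and embedding drift, can be illustrated with standard-library Python. This is one possible way to compute them, not what MLflow does internally: comparing embedding centroids by cosine distance, the nearest-rank percentile, the sample data, and the 0.2 alert threshold are all assumptions chosen for the example.

```python
import math


def centroid(vectors):
    """Component-wise mean of a batch of equal-length embedding vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def cosine_distance(a, b):
    """1 - cosine similarity; 0 means same direction, larger means more drift."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def p95(values):
    """95th percentile using the nearest-rank method."""
    ordered = sorted(values)
    return ordered[math.ceil(0.95 * len(ordered)) - 1]


DRIFT_THRESHOLD = 0.2  # hypothetical alert threshold

baseline = [[1.0, 0.0], [0.9, 0.1]]        # embeddings seen at evaluation time
live_similar = [[1.0, 0.05], [0.95, 0.0]]  # live traffic close to the baseline
live_shifted = [[0.0, 1.0], [0.1, 0.9]]    # live traffic about something else

drift_similar = cosine_distance(centroid(baseline), centroid(live_similar))
drift_shifted = cosine_distance(centroid(baseline), centroid(live_shifted))

latencies_ms = [120, 130, 110, 900, 125, 140, 115, 135, 128, 122]

print("drift alert (similar):", drift_similar > DRIFT_THRESHOLD)
print("drift alert (shifted):", drift_shifted > DRIFT_THRESHOLD)
print("p95 latency (ms):", p95(latencies_ms))
```

Tail percentiles matter more than averages here: a single 900 ms response barely moves the mean, but it dominates the p95 that users actually feel.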

Why does any of this matter in 2025?

In 2025, the role of the data team has fundamentally evolved. We’re not just “shipping tables” or “training models” anymore. We’re building complete data and AI products. These products need to be secure, scalable, observable, and truly integrated into the business.

Here’s what makes Databricks stand out in this new environment:

Open by design

Delta, Parquet, Unity Catalog, and Spark keep your stack portable and standards-based. No vendor lock-in. No hidden traps.

Truly unified platform

Instead of patching together compute, storage, cataloging, vector databases, orchestration, and monitoring, Databricks brings everything into one place. One architecture. One control plane.

AI-native infrastructure

Unlike traditional data warehouses, Databricks was built with machine learning and generative AI in mind. With Mosaic AI, you get practical tools to embed intelligence into your products. This includes embedding storage, vector search, orchestration, guardrails, evaluation tools, and app hosting.

Serverless with accountability

You can scale compute resources up or down without losing governance. From SQL dashboards to LLM agents, every asset is traceable: performance, cost, and access are all visible in one interface.

In this new landscape, success is no longer about collecting the most data. It’s about moving fast, trusting your outputs, and delivering real value. Databricks enables that shift, from quantity to velocity, trust, and business outcomes.

At BIX Tech, helping organizations achieve this transformation is our focus. Want to get started today? Click here and schedule an appointment with one of our experts!

FAQ & What to do next

Is Databricks only for big enterprises?

Not at all. Many lean teams operate entirely on a single workspace with serverless SQL and still build high-impact solutions. Its modular structure allows you to start small and scale when ready.

What skills do I need to use Databricks effectively?

Basic SQL is more than enough to get started. Python is helpful for transformations and machine learning, but Spark complexity is abstracted away by tools like Databricks SQL and Delta Live Tables.

Can I run GenAI apps in production on Databricks?

Absolutely. Mosaic AI delivers all the necessary components: vector storage, embedding, orchestration, guardrails, evaluation tools, and production-ready hosting.

What if I already use Snowflake or BigQuery?

You don’t need to migrate everything. Databricks’ data federation lets you query external systems such as Snowflake, BigQuery, and Oracle in place, and work with Iceberg tables natively. You only move what’s necessary.

How secure is Unity Catalog?

Unity Catalog enables layered governance with workspace isolation, RBAC, ABAC, column masking, row filtering, and detailed audit logs. It integrates with enterprise identity providers like Okta and Microsoft Entra ID (formerly Azure AD).

Is Databricks meant to replace my BI tool?

No. It can serve as your full-stack analytics engine, but it also integrates seamlessly with Tableau, Power BI, Looker, and other tools. Databricks SQL provides robust dashboarding without forcing a migration.

Whether you’re solving a single use case or replacing an outdated legacy platform, the key to success with Databricks is designing with scale and governance in mind from the start.

Our team has helped clients ranging from startups to large enterprises unlock the full value of Databricks. We support data warehouse migrations, real-time analytics, MLOps pipelines, and AI co-pilots in production.

If you’re evaluating Databricks or just want a second opinion on your current setup, we’re here to help.

Click the banner below and let’s talk today!