Mastering Retrieval-Augmented Generation (RAG) for Next-Level AI Solutions

Retrieval-Augmented Generation (RAG) is a cutting-edge technique in generative AI. To grasp its essence, picture a scene in a hospital room.

In the realm of medical practice, doctors leverage their extensive knowledge and expertise to diagnose and treat patients.

Yet, when faced with intricate medical conditions that require specialized insight, doctors often consult academic literature or delegate research tasks to medical assistants, who gather the relevant treatment protocols to support the doctor's decisions.

In the domain of generative AI, the role of the medical assistant is played by the process known as RAG.

So, what exactly is RAG?

RAG stands for Retrieval-Augmented Generation, a methodology designed to enhance the accuracy and reliability of Large Language Models (LLMs) by supplementing their knowledge with external data sources. LLMs are neural networks with billions of parameters, trained on massive datasets, and they can respond to prompts swiftly. However, they falter when asked about specialized topics or current facts.

This is where RAG comes into play, enabling LLMs to draw on knowledge beyond their training data without requiring a new neural network to be trained for each specific task. It emerges as a compelling and efficient way to generate more dependable responses.
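As a rough illustration of the idea, the sketch below retrieves the most relevant passage from a tiny in-memory knowledge base and places it in the prompt that would then be sent to an LLM. Everything in it is an assumption made for illustration: the sample documents, the function names (`retrieve`, `build_prompt`), and the word-overlap scoring, which stands in for the embedding-based search that production systems typically use. No model is actually called.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice this would be manuals, policies, or reports.
DOCUMENTS = [
    "Refund requests must be submitted within 90 days of the ticket purchase.",
    "Checked baggage is limited to 23 kg per passenger on economy fares.",
    "Loyalty points expire after 18 months without account activity.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    query = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, passages):
    """Augment the user's question with the retrieved passages."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
    )


question = "How many days do I have to request a refund?"
passages = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, passages)  # this string would be sent to the LLM
```

The model itself is untouched in this flow: only the prompt changes, which is why the knowledge base can be updated without retraining.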

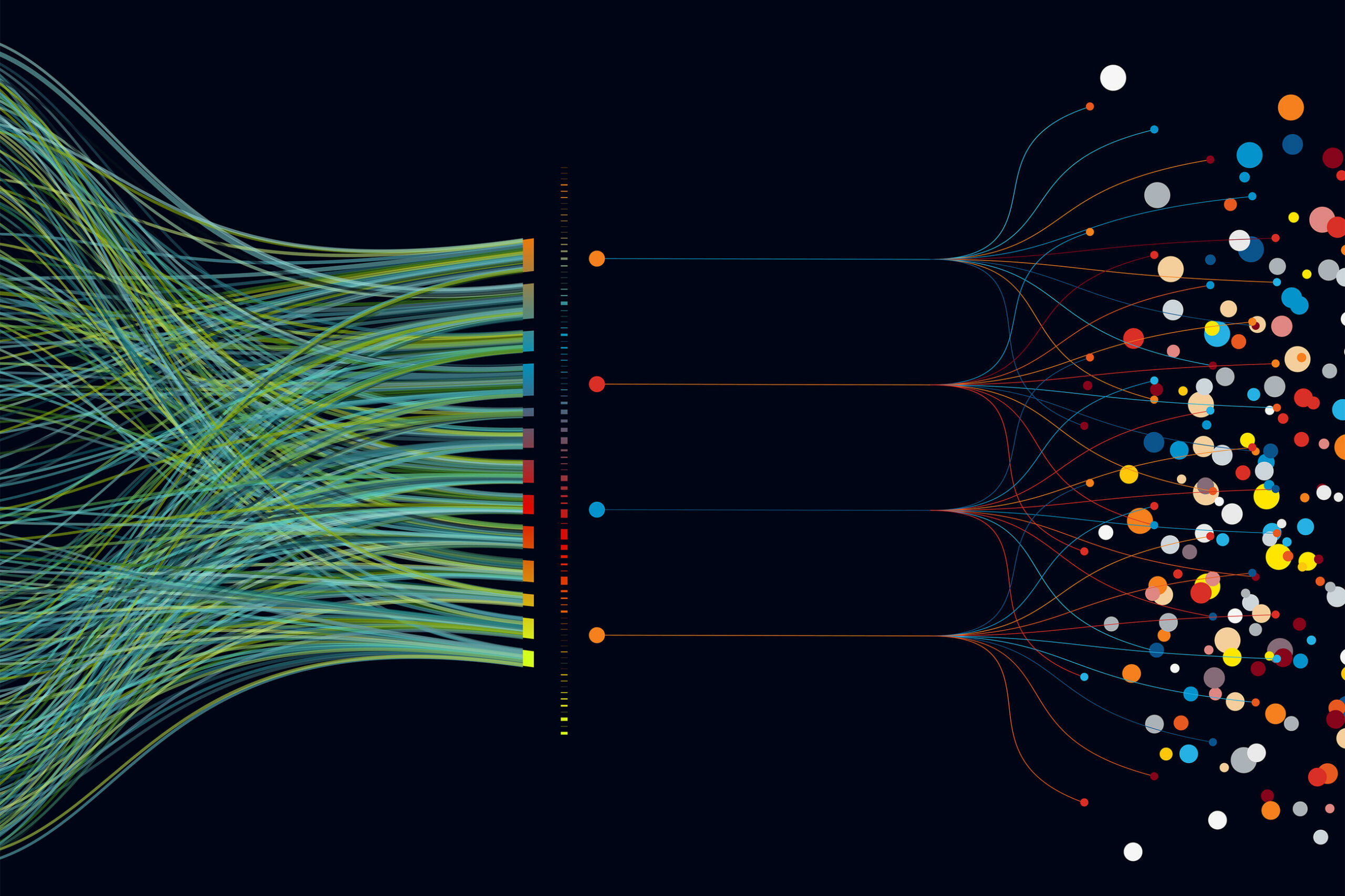

Image developed with Artificial Intelligence.

Why is RAG pivotal?

In complex, knowledge-intensive tasks, general-purpose LLMs may perform worse, providing false information, outdated guidance, or answers grounded in unreliable references. This degradation stems from intrinsic characteristics of LLMs: their knowledge is frozen at training time, their outputs are difficult to explain, and their training on general data overlooks specific business processes.

These challenges, coupled with the substantial computing power required for model development and training, mean that using a general model in certain applications, such as chatbots, can erode user trust. A case in point is the recent incident involving the Air Canada chatbot, which gave a customer inaccurate information, ultimately misleading them into purchasing a full-price ticket.

RAG presents a viable solution to these issues by grounding pre-trained LLMs in authoritative data sources. This approach gives organizations greater control over the generated responses and builds trust in them, mitigating the risks associated with the misuse of generative AI technology.

What are the practical applications of RAG?

RAG models have demonstrated reliability and versatility across various knowledge domains. In practical terms, any technical material, policy manual, or report can be used to enhance Large Language Models (LLMs). This broad applicability makes RAG a valuable asset across a diverse range of business markets. Some of its most common applications include:

- Chatbots: Facilitating customer assistance by providing personalized and more accurate answers tailored to the specific business context.

- Content generation: Offering capabilities such as text summarization, article generation, and personalized analysis of lengthy documents.

- Information retrieval: Improving the performance of search engines by efficiently retrieving relevant knowledge or documents based on user prompts.

How does RAG work?

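Strictly speaking, RAG does not change a model's weights. At query time, a retriever searches an external knowledge source for passages relevant to the user's question, and those passages are added to the prompt so that the LLM can ground its answer in them. Fine-tuning, by contrast, is a transfer learning approach that trains the weights of a pre-trained model on new data. This process can be applied to the entire neural network or a subset of its layers, with the option to “freeze” layers that are not being fine-tuned. The two techniques are complementary and are often combined.

The implementation of RAG is relatively straightforward, with the coauthors of the original RAG paper suggesting that it can be achieved with just five lines of code. When fine-tuning is used alongside it, the process typically involves four main steps: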

- Create external data and prepare the training dataset: Collect data from various sources, such as files, database records, or long-form text. Manipulate the data to fit the model’s data ingestion format.

- Train a new fine-tuned model: After ensuring the data is appropriately formatted, proceed with the fine-tuning process. The duration of model training can vary from minutes to hours, depending on the dataset size and available computational power.

- Model validation: Evaluate the training metrics, including training loss and accuracy, to validate the model. Generate samples from both the baseline model and the fine-tuned model for comparison. If performance is suboptimal, iterate over data quality, quantity, and model hyperparameters.

- Model deployment and utilization: Once validated, deploy the model for real-world tasks, ensuring integration with the system is reliable, safe, and scalable. Continuous monitoring is crucial to assess system performance and responsiveness.
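The first step above, fitting the data to the model's ingestion format, might look like the sketch below. It assumes the chat-style JSON Lines layout that OpenAI documents for fine-tuning chat models (one JSON object per line, each with a `messages` list). The question-and-answer pairs, the system message, and the helper name `to_jsonl` are made up for illustration, and other providers expect different formats.

```python
import json

# Hypothetical question/answer pairs drawn from internal documentation.
EXAMPLES = [
    ("What is the refund window?", "Refunds can be requested within 90 days of purchase."),
    ("What is the baggage limit?", "Economy fares include one checked bag of up to 23 kg."),
]

# Hypothetical system message shared by every training example.
SYSTEM_MESSAGE = "You are a support assistant that answers from company policy."


def to_jsonl(examples, system_message=SYSTEM_MESSAGE):
    """Serialize (question, answer) pairs as one chat-format JSON object per line."""
    lines = []
    for question, answer in examples:
        record = {
            "messages": [
                {"role": "system", "content": system_message},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


training_data = to_jsonl(EXAMPLES)
# The resulting text would be saved as a .jsonl file and supplied to the fine-tuning job.
```

Keeping the conversion in a single function makes it easy to rerun when data quality or quantity changes during the validation step.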

To fine-tune your own model, you can follow OpenAI's step-by-step tutorial on fine-tuning GPT-3.5.

Ready to explore the limitless possibilities of Retrieval-Augmented Generation (RAG) with our team of experts?

Let’s delve deeper into how RAG can transform your business and elevate your AI capabilities.

Connect with our specialists and unlock the full potential of generative AI tailored to your unique needs. Let innovation guide your journey – reach out to us now!

Article written by Murillo Stein, data scientist at BIX.
